I Love You, Tweet-Preprocessor

This is going to be a short article, but I really wanted to share a pretty neat library named tweet-preprocessor that I stumbled upon while reading some random stuff on Hacker News.

The cool bit

I have a confession to make: I have never been able to remember anything about regular expressions. To this day, I still struggle to implement even the most basic character-filtering routine, and I go through the same traumatising process each and every time. I pass some arguments into re.compile(), then try to pass re.findall() through a lambda function to strip out whatever it is that I’m trying to get rid of. And it systematically fails. That usually takes me to Stack Overflow or some similar website. I find a code snippet that seems to do the job, paste it into my own code, and run the script, only to get a deprecation error. I go back to the Stack Overflow post and realise that it dates back to 2011.

But that was before. I have now discovered tweet-preprocessor and my existence feels complete.

According to its PyPI page, the library supports cleaning, tokenizing, and parsing of:

- URLs

- Hashtags

- Mentions

- Reserved words (RT, FAV)

- Emojis

- Smileys

- JSON and .txt files

Some examples

If you are using Google Colab (and you should!), you can install any Python library by placing an exclamation mark just before your pip install command. You will of course have to reinstall the library each time you start a new runtime.

!pip install tweet-preprocessor

We can now import pandas and preprocessor, then create a few sample tweets.

import pandas as pd

import preprocessor as tp

tweets = {

"text": [

"#regularexpressions suck but #perl is awesome",

"Hey @elonmusk what's up?",

"This is awesome https://pypi.org/project/tweet-preprocessor/"

]

}

df = pd.DataFrame(tweets)

df

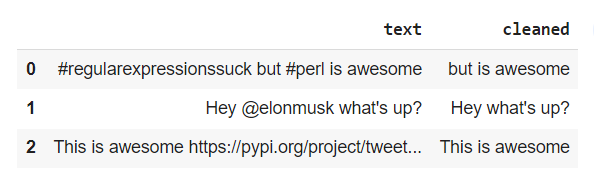

The first method we can use is .clean(), which will automatically remove pretty much anything Twitter-specific:

df["cleaned"] = df["text"].apply(lambda x: tp.clean(x))

df

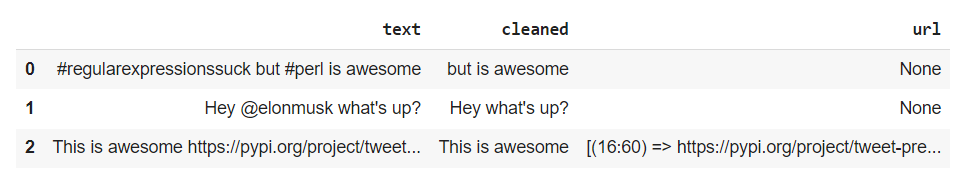

To be fair, even if it only did that, tweet-preprocessor would already be worth installing. But guess what, it also supports the extraction of retweets, favorites, hashtags, and URLs!

df["url"] = df["text"].apply(lambda x: tp.parse(x).urls)

df

As can be seen in the screenshot below, .parse().urls returns not only the URLs present in a given string, but also the position at which each URL starts and the position at which it ends.

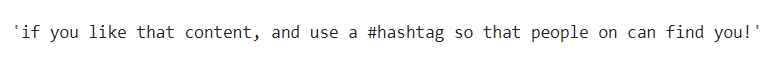
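
Those start and end positions are the same kind of information that Python's own re module exposes on a match object. Here is a rough illustration of the idea, using a deliberately naive URL pattern and a hypothetical find_urls helper of my own. It is a sketch of the concept, not the library's actual implementation:

```python
import re

# Naive, assumed URL pattern for illustration only; real-world URLs are messier.
URL = re.compile(r"https?://\S+")


def find_urls(text):
    """Return a (start, end, url) tuple for every URL found in the text."""
    return [(m.start(), m.end(), m.group()) for m in URL.finditer(text)]


text = "This is awesome https://pypi.org/project/tweet-preprocessor/"
matches = find_urls(text)
start, end, url = matches[0]

# Slicing the original string with the two positions gives back the URL.
print(start, end, text[start:end] == url)
```

Having the positions at hand means you can highlight or replace a match in place instead of simply deleting it.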

Last but not least, it is also possible to choose what to remove by passing some parameters to the .set_options() method.

text = "@RT if you like that content, and use a #hashtag so that 99999999 people on https://twitter.com can find you!"

tp.set_options(tp.OPT.URL, tp.OPT.MENTION, tp.OPT.NUMBER)

tp.clean(text)
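
Conceptually, picking options boils down to deciding which patterns get applied to the text. Below is a toy version of that idea using only the standard library. The option names, the patterns, and the clean_selected helper are all made up for this example rather than taken from tweet-preprocessor, and they will miss plenty of edge cases:

```python
import re

# Toy patterns keyed by made-up option names; the library's own rules differ.
PATTERNS = {
    "url": re.compile(r"https?://\S+"),
    "mention": re.compile(r"@\w+"),
    "hashtag": re.compile(r"#\w+"),
    "number": re.compile(r"\b\d+\b"),
}


def clean_selected(text, *options):
    """Remove only the kinds of token named in options, leave the rest alone."""
    for name in options:
        text = PATTERNS[name].sub("", text)
    # Collapse the gaps left behind by the removed tokens.
    return " ".join(text.split())


text = (
    "@RT if you like that content, and use a #hashtag so that "
    "99999999 people on https://twitter.com can find you!"
)

# The hashtag is not among the selected options, so it survives.
result = clean_selected(text, "url", "mention", "number")
print(result)
```

Even this small sketch needs four patterns and a whitespace fix-up, which is exactly the sort of thing I would rather not write myself.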

How awesome is that?