BM25: An Alternative to TF-IDF in JavaScript Using WinkNLP

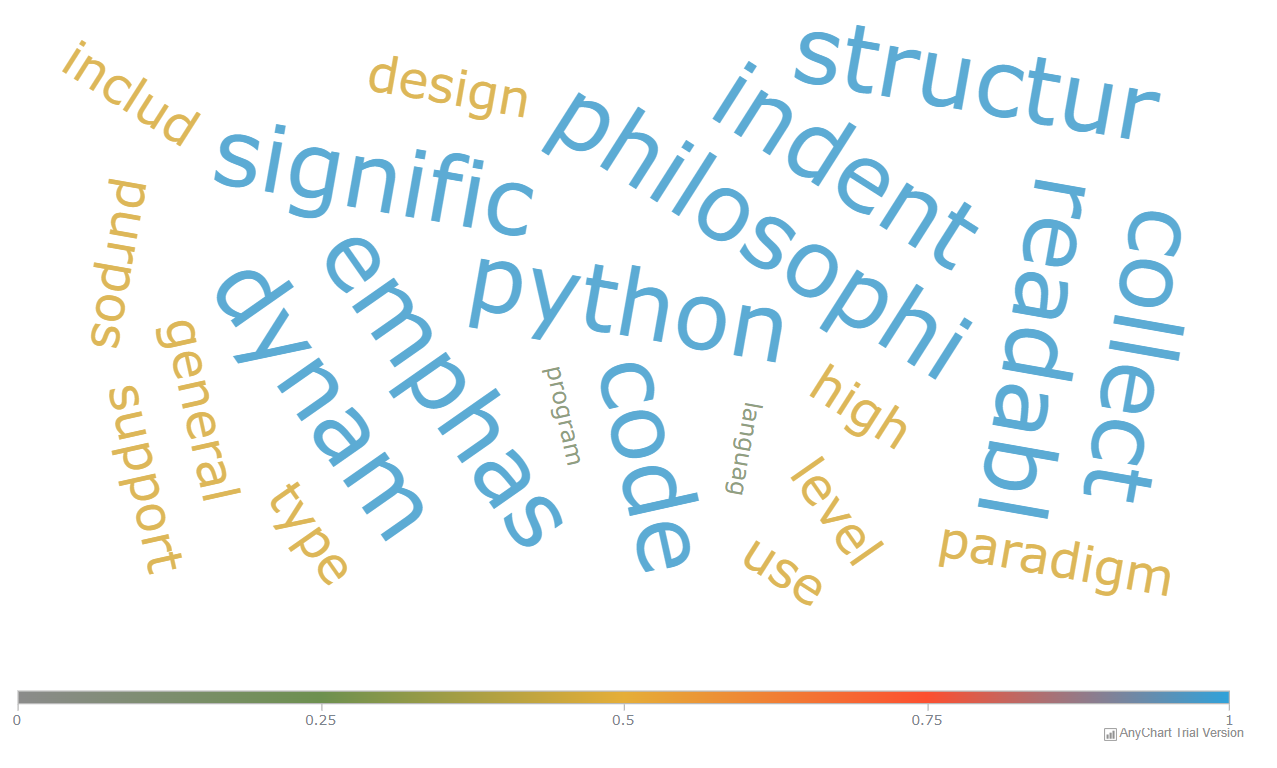

Below is an example of what we’ll be doing in this article:

The JavaScript ecosystem frequently receives a fair amount of criticism for being a bit of a mess, with poorly maintained packages and new front-end or back-end frameworks appearing almost every month.

However, if you’re a data science practioner, the past few years have seen JavaScript slowly rise as a perfectly viable option for a second programming language to learn. Whether you want to get familiar with data visualization (D3.js, AnyChart, etc..) or data manipulation (Danfo.js or Arquero.js) packages, you’re almost guaranteed to find an active and well-maintained project that suits your needs.

And quite logically, the field of natural language processing has also seen the development of several very comprehensive libraries over the past three or four years, with packages like winkNLP and Compromise.js offering a real alternative to their Python or R counterparts.

Today’s article is going to focus on winkNLP specifically, one of the most popular and intuitive nlp packages for Node.js.

From TF-IDF to BM25

If we want to understand the content of a set of document (aka a corpus), we probably need to measure how important the words within each of these document are. To do so we can’t solely look at the frequency of each term, as some terms are probably going to occur a lot, like “the”,“a”,“and”, etc.. To avoid that issue, the following techniques are commonly used:

-

TF-IDF is basically a ranking function that multiplies the term frequency (TF), that is to say the number of occurences of a term within the document divided by the total words in the document, and the inverse document frequency (IDF), which is going to return a higher value for words that aren’t commonly found across all documents.

-

BM25, also known as Okapi BM25, helps normalise this score. At a high, level BM25 also calculates the TF and IDF. But though BM25 is a far less popular technique, it addresses some of the limitations of TF-IDF. As the frequency of the terms found within a document increases, their score increases linearly. Say a document that comprises a total of 1,000 words features the term “JavaScript” 10 times. Now, imagine another document within our corpus, that also comprises 1,000 words, but features the term “JavaScript” 20 times. The TF-IDF score for “JavaScript” for the second document would basically be twice as large as the one for the first document. And yet, this doesn’t mean that we should really consider this second document to be twice as relevant as the first one. Behind the scene, BM25 will provide a combined weight for each term within the documents, ensuring that the TF for these terms isn’t too strong.

For a more in-depth overview of how BM25 works, I highly recommend you to read this article as well as this research paper.

Besides, please note that if you want a production-ready and efficient way of computing the importance of terms within a corpus, you might also want to have a look at more recent techniques such as Word2Vec.

A crash course in winkNLP

There are several Node packages that can be used to output either the TF-IDF or BM25 scores for a given collection of documents. I have for instance used a library named Tiny-TF-IDF, which also provides a set of methods to calculate the pairwise similarity between a collection of documents and that we might explore in a future article.

But let’s go back to winkNLP for now and see how we can get started:

const winkNLP = require("wink-nlp");

const model = require("wink-eng-lite-web-model");

const its = require("wink-nlp/src/its.js");

const as = require("wink-nlp/src/as.js");

If you’re familiar with the spaCy library in Python, the basic syntax for winkNLP should look pretty familiar to you. After loading the main package and its helpers, we are required to load a language model, in this case the default language which happens to be the light weight English language model. The its and as variables are helpers, which we will be using to extract item properties and reduce a collection of documents.

Here’s how we can simply split a sentence by tokens and print them to the console:

let text = "Oh hello!";

const nlp = winkNLP(model);

const doc = nlp.readDoc(text);

console.log(doc.tokens().out());

What we did above is, I think, pretty straightforward:

winkNLP()instantiated the winkNLP packagereadDoc()transformed the input text string into a winkNLP document.itsis a “helper”, which winkNLP uses to extract item propertiesout()is a method that returns either an arrays of strings or a JavaScript object

We are now ready to write something a bit more complicated, but that arguably provides more value than just outputting a simple list of tokens:

const getNLP = (data) => {

const text = data;

const nlp = winkNLP(model);

const doc = nlp.readDoc(text);

let keywords = doc.tokens().out();

let result = {

"Token": [],

"Tag": [],

"Lemma": [],

"Stopword": []

};

for (let i = 0; i < keywords.length; i++) {

result["Token"].push(doc.tokens().out(its.value)[i]);

result["Tag"].push(doc.tokens().out(its.pos)[i]);

result["Lemma"].push(doc.tokens().out(its.lemma)[i]);

result["Stopword"].push(doc.tokens().out(its.stopWordFlag)[i]);

}

return result;

};

const getResults = (data) => {

let processed_text = getNLP(data);

for (let p in processed_text) {

console.log(p + "\n\t", processed_text[p].join(" / "));

}

}

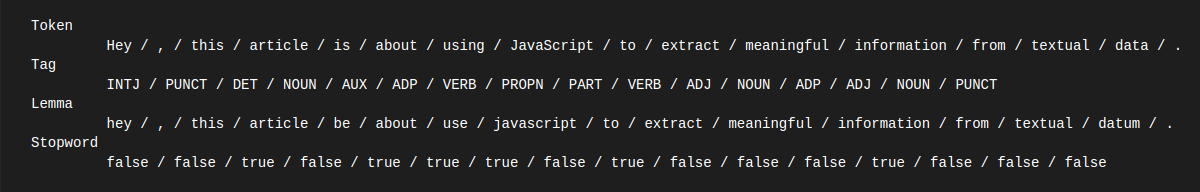

let text_to_process = "Hey, this article is about using JavaScript to extract meaningful information from textual data.";

getResults(text_to_process);

Though the code above is again fairly simple, let’s go through it bit by bit:

- We first created a placeholder Javascript object and assigned it to a

resultvariable - We then looped through the tokens that are contained within our document, and pushed different

itshelpers (the actual token, its POS tag, its lemmatized form, and whether or not it is considered a stopword) - Finally, the

getResults()function is just an entirely optional way of outputting to the console the now transformedresultobject.

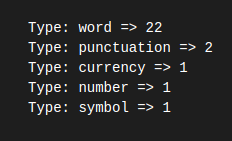

One last pretty cool thing we can do, is leverage *winkNLP’s .freqTable helper to count the various types of terms within a document:

let text = "Julien's website has seen a 60% week on week increase between August and October, but he's not making any $ with it."

const getFreq = (data) => {

const nlp = winkNLP(model);

let doc = nlp.readDoc(data);

doc.tokens()

.out(its.type, as.freqTable)

.forEach(e => {console.log(`Type: ${e[0]} => ${e[1]}`)})

}

getFreq(text);

You can find a comprehensive list of useful helpers here (sentiment, bigrams, contractions, etc..).

Now, this is pretty neat, but it doesn’t have anything to do with the main topic of this article. So let’s move on to our BM25 algorithm!

Vectorizing documents with the BM25 algorithm

What we need first, is create a sample corpus that we can fit our algorithm to. To keep things simple, our corpus will contain a total of three documents, which in all fairness are just a bunch of sentences I copied from Wikipedia:

const corpus = [

"Python is a high-level, general-purpose programming language. Its design philosophy emphasizes code readability with the use of significant indentation. Python is dynamically-typed and garbage-collected. It supports multiple programming paradigms, including structured, object-oriented and functional programming.",

"JavaScript, often abbreviated as JS, is a programming language that is one of the core technologies of the World Wide Web, alongside HTML and CSS. As of 2022, 98% of websites use JavaScript on the client side for webpage behavior, often incorporating third-party libraries.",

"Ruby is an interpreted, high-level, general-purpose programming language which supports multiple programming paradigms. It was designed with an emphasis on programming productivity and simplicity. In Ruby, everything is an object, including primitive data types."

];

As you have probably guessed, our next step it to require the appropriate model from winkNLP’s utilities package, and then instanciate the vectorizer. We’ll be using the default parameters (to learn more about supported parameters for the BM25 vectorizer, please refer to the official documentation:

const BM25Vectorizer = require('wink-nlp/utilities/bm25-vectorizer');

const bm25 = BM25Vectorizer();

Logically, we then train the vectorizer on each document:

corpus.forEach( (doc) => bm25.learn(

nlp.readDoc(doc)

.tokens()

.out(its.normal)

)

);

Using the out() method we are now able to access a wide range of useful features, such as:

its.bowit.docBOWArrayits.docTermMatrixits.idfits.termsits.tf

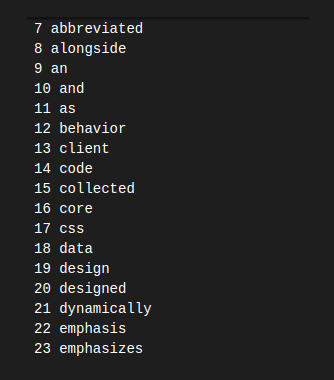

For instance, it.terms will create an array of individual terms within the corpus, sorted alphabetically:

const getUniqueTerms = () => {

let terms = bm25.out(its.terms);

for (let term in terms) {

console.log(term, terms[term])

}

console.log(bm25.out(its.terms));

}

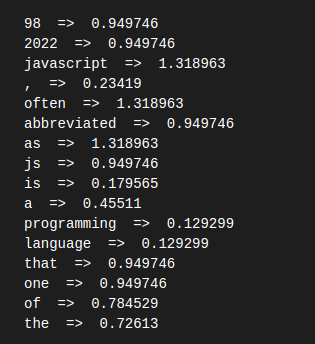

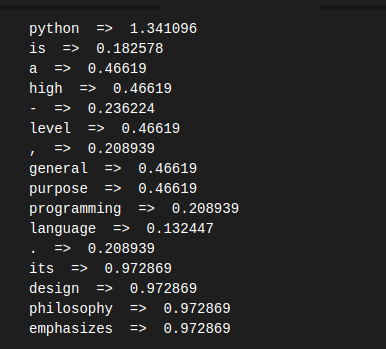

More interestingly, its.bow will create a bag of words model for any of the documents within our corpus. In this case the first document:

const getBowPerDoc = (which) => {

let terms = bm25.doc(which).out(its.bow)

for (let term in terms) {

console.log(term, " => ", terms[term])

}

}

… or across each document:

const getBow = () => {

let terms = bm25.out(its.docBOWArray)

for (term in terms) {

for (t in terms[term]) {

console.log(`${t} => ${terms[term][t]}`)

}

}

}

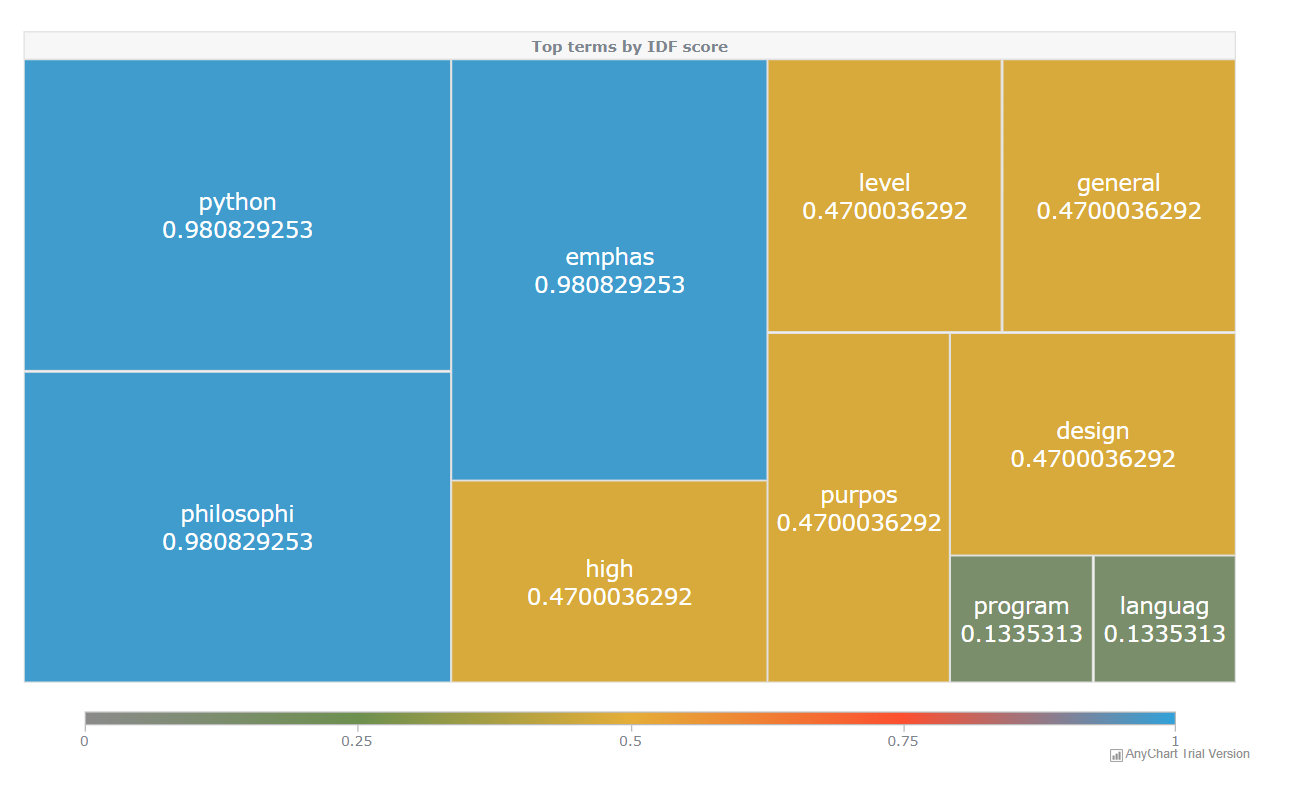

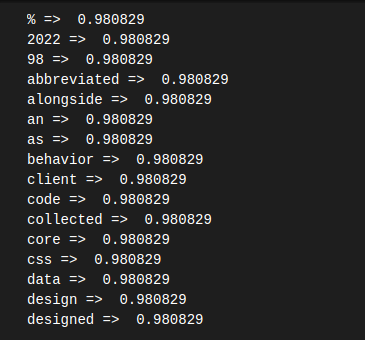

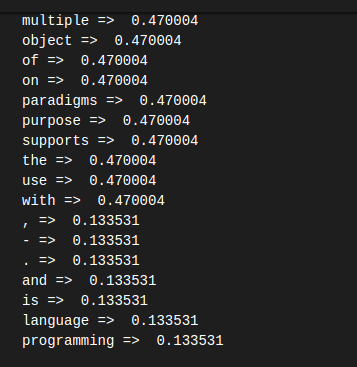

Things however start getting really interesting when we use the its.idf helper to retrieve the IDF score for each term in the whole corpus

const getIdfPerTerm = () => {

let terms = bm25.out(its.idf);

for (term in terms) {

console.log(`${terms[term][0]} => ${terms[term][1]}`)

}

}

Wait, why are we seeing duplicate values for the scores of some of our terms? Well, to be fair, our three documents are quite short, and therefore might not contain enough unique terms for our vectorizer to return a wide range of BM25 scores.

That being said, we can take a reversed approach and try to understand how negatively correlated our documents are. For instance, we can get some fairly interesting insights by observing the terms that have a low score. See for instance how “programming” and “language” have a shared score of 0.133531, as they are showing multiple times across all three documents:

Ok, so our three documents were too short. But that raises another question then: what if our documents are on the contrary too large, or worse, what if they’re of different sizes?

Several approaches can be taken to deal with large or imbalanced textual datasets:

- Using a list of stopwords to filter out unimportant terms and punctuation signs

- Pre-processing the documents to filter out some specific part-of-speech tags. There’s no real consensus within the world of natural language processing as to which tags are worth keeping, but you might want to start with verbs, nouns, and numerals.

In-browser visualisation

What we can do next, is try and see how to plot the terms and their BM25 score using a great JavaScript data visualisation package named AnyChart. If you’re not familiar with this library, it is in my humble opinion one of the most underrated plotting frameworks across all popular programming languages. I personally find AnyChart to be almost as powerful as d3.js, and at least as intuitive as GGplot.

When I say powerful, I mean that AnyChart literally lives up to its name and supports some insane things like GANTT charts for instance.

Back to our BM25 vectorizer, we’re about to find out that things start getting a bit messy when we try to use a client-side version of winkNLP. According to their official website, the only way to use winkNLP in the browser is through bundler tools like Webpack or Browserify.

However, a quick search on Skypack returned a series of client-side packages for the whole winkNLP suite. I initially thought that these had been kindly uploaded there by some charitable soul, before realising that they actually were official releases!

To import them we’ll simply need to embed a <script> tag of type="module" within the <body></body> tags of our html page:

<script type="module">

import winkNlp from 'https://cdn.skypack.dev/wink-nlp';

import winkEngLiteWebModel from 'https://cdn.skypack.dev/wink-eng-lite-web-model';

import anychart from 'https://cdn.skypack.dev/anychart';

import winkBm25TextSearch from 'https://cdn.skypack.dev/wink-bm25-text-search';

const nlp = winkNlp(winkEngLiteWebModel);

let its = nlp.its;

let as = nlp.as;

</script>

I will admit that what left me relatively baffled, is that the syntax for winkNLP’s BM25 vectorizer is different between the Skypack page and the official documentation. In other words, I ran into a lot of errors, and had to rewrite all the Node.js code, as we’re about so see.

Still within the <script></script> tags, let’s paste our three documents, in a slightly different format this time:

let corpus = [

{

"title": "python",

"body": "Python is a high-level, general-purpose programming language. Its design philosophy emphasizes code readability with the use of significant indentation. Python is dynamically-typed and garbage-collected. It supports multiple programming paradigms, including structured, object-oriented and functional programming."

},

{

"title": "javascript",

"body": "JavaScript, often abbreviated as JS, is a programming language that is one of the core technologies of the World Wide Web, alongside HTML and CSS. As of 2022, 98% of websites use JavaScript on the client side for webpage behavior, often incorporating third-party libraries."

},

{

"title": "ruby",

"body": "Ruby is an interpreted, high-level, general-purpose programming language which supports multiple programming paradigms. It was designed with an emphasis on programming productivity and simplicity. In Ruby, everything is an object, including primitive data types."

}

]

As you have probably noticed, our array of strings is gone. Each document now needs to be in a JavaScript Object, and must contain a "title" as well as a "body".

How we pass the corpus through the ranking function is also going to be slightly different, as once we have initialised the BM25 vectorizer, we’ll this time have to use the defineConfig() method as indicated on the Skypack page (we will leave all parameters as default, but will remove all stopwords through chaining methods, as suggested in the official documentation).

const prepTask = function ( text ) {

const tokens = [];

nlp.readDoc(text)

.tokens()

.filter( (t) => ( t.out(its.type) === 'word' && !t.out(its.stopWordFlag) ) )

.each( (t) => tokens.push( (t.out(its.negationFlag)) ? '!' + t.out(its.stem) : t.out(its.stem) ) );

return tokens;

};

let engine = winkBm25TextSearch()

engine.defineConfig( { fldWeights: { title: 1, body:2 } } );

engine.definePrepTasks( [ prepTask ] );

corpus.forEach( function ( doc, i ) {

engine.addDoc( doc, i );

} );

engine.consolidate();

Finally, we can access all the helpers we saw earlier on using the following set of methods:

console.log(engine.getDocs());

console.log(engine.getTokens());

console.log(engine.getIDF());

console.log(engine.getTotalDocs());

console.log(engine.getTotalCorpusLength());

Now, though this is useful, it’s still not exactly what we want. We need to create some simple visualisations for our top terms and scores.

To do so, we’re going to use the following two types of plots:

- a tree map chart, which is some sort of rectangular frame that contains a data tree of terms and their parent-child hierarchy

- a word cloud (or tag cloud as it is called in AnyChart), which is a far more frequent visualisation type. However, I personally am not a big fan of that type of charts which I sometimes find pretty difficult to read

To create a tree map chart, we’ll first have to add a simple <div></div> element within our <body></body> and specify its id= attribute:

<div id="viz"></div>

One this is done, we want to make sure that the chart will be displayed across the whole page, and for that we’ll need to create a css file:

html, body, #viz{

width: 100%;

height: 100%;

margin: 0;

padding: 0;

}

#viz {

float: left;

height: 100%;

width: 50%;

}

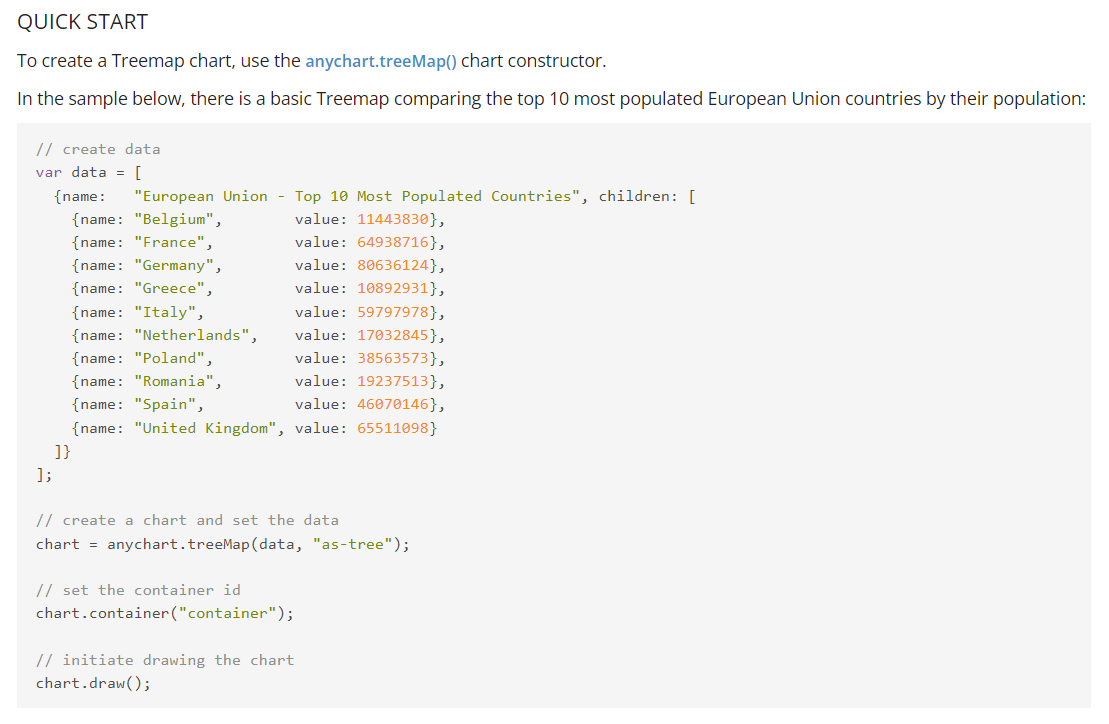

For some reason, to create a tree map, AnyChart requires the data to be in the following format:

So back to our html page, let’s paste the following function right below where we imported the modules:

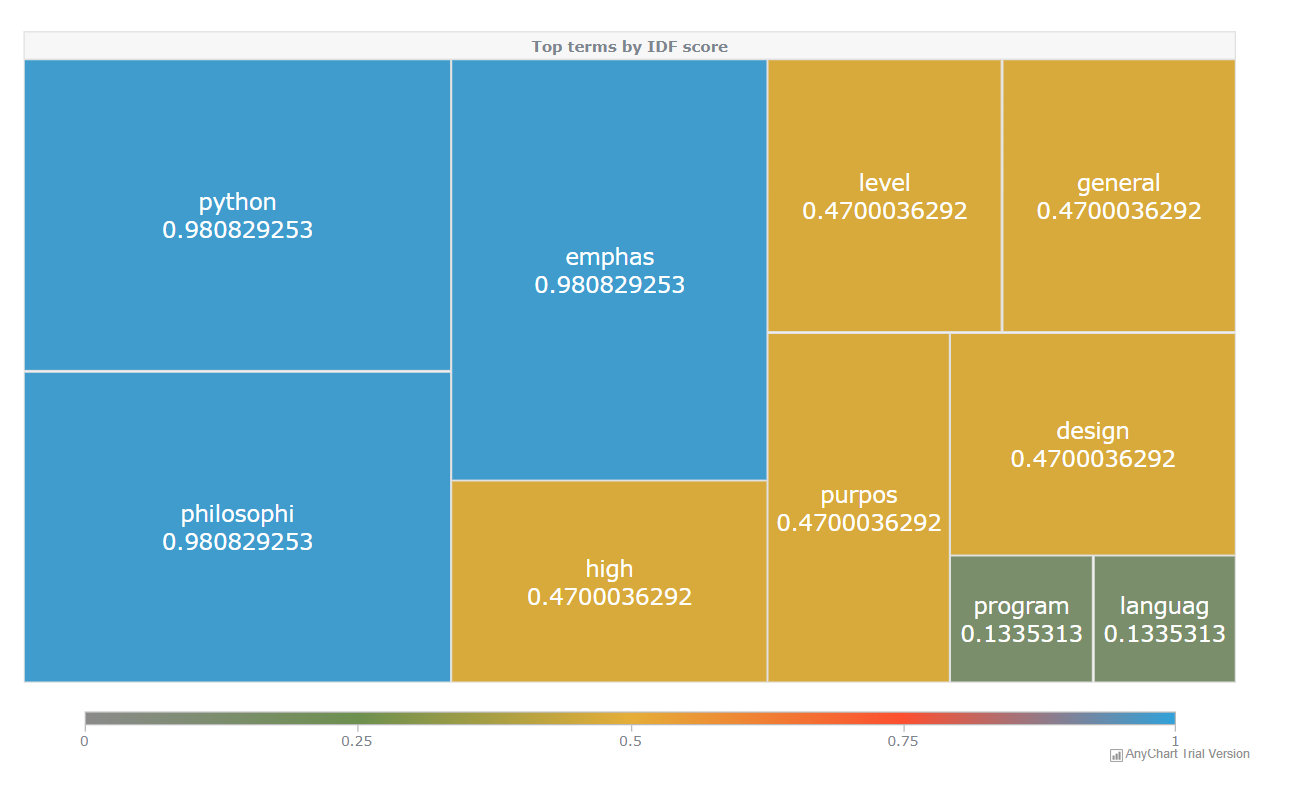

const getTreeMap = (howmany,title) => {

// here we're simply creating an Object that

let term = new Array();

let score = new Array();

let child = new Array();

for (let e in engine.getTokens()) {

term.push(e);

}

for (let e in engine.getIDF()) {

score.push(engine.getIDF()[e])

}

term = term.slice(0,howmany);

score = score.slice(0,howmany);

for (let i = 0; i < term.length; i++) {

child.push({name: term[i], value: score[i]});

}

let result = [ {name: title, children: child} ];

# creating the chart and adding in some customisation elements

let chart = anychart.treeMap(result, "as-tree");

let customColorScale = anychart.scales.linearColor();

customColorScale.colors(["#8b8b8b","#6d904f","#e5ae38","#fc4f30","#30a2da"]);

chart.colorScale(customColorScale);

chart.colorRange().enabled(true);

chart.colorRange().length("90%");

chart.normal().labels().fontSize("18");

chart.normal().labels().fontColor("#FFFFFF");

chart.normal().headers().fontWeight("bold");

chart.container("viz");

chart.draw();

}

getTreeMap(10,"Top terms by IDF score");

The chart we get when refreshing the page is pretty neat! As you can see in the code above, all we had to do was to add in some customisation parameters to make the chart a bit prettier.

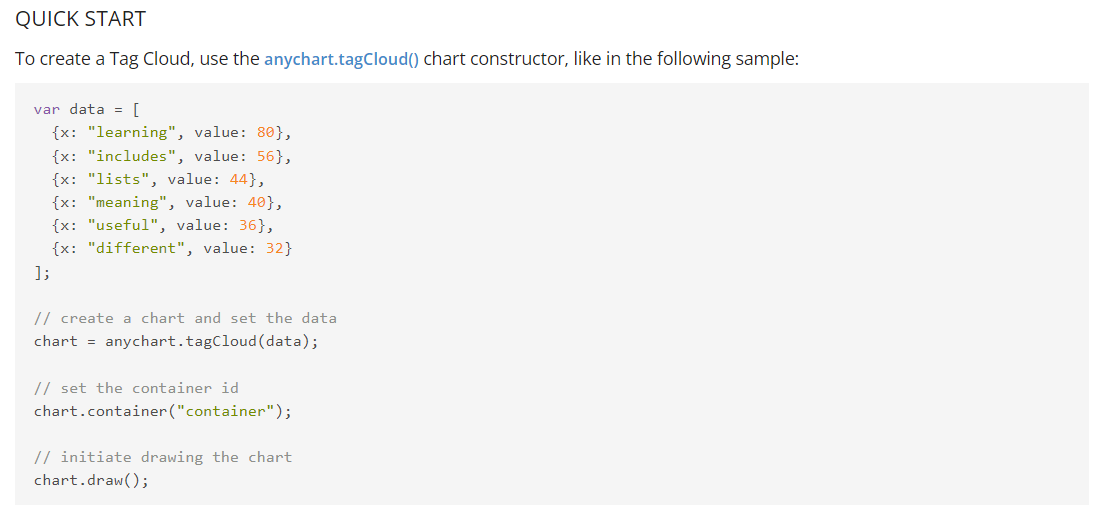

Creating a so-called “tag cloud” isn’t fundamentally much different, though the data has to be in a slightly changed format, as shown on the official documentation:

const getTagCloud = (howmany) => {

let term = new Array();

let score = new Array();

let cloud = new Array();

for (let e in engine.getTokens()) {

term.push(e);

}

for (let e in engine.getIDF()) {

score.push(engine.getIDF()[e])

}

term = term.slice(0,howmany);

score = score.slice(0,howmany);

for (let i = 0; i < term.length; i++) {

cloud.push({x: term[i], value: score[i]});

}

let chart = anychart.tagCloud(cloud);

let customColorScale = anychart.scales.linearColor();

customColorScale.colors(["#8b8b8b","#6d904f","#e5ae38","#fc4f30","#30a2da"]);

chart.fromAngle(10);

chart.toAngle(100);

chart.anglesCount(5);

chart.textSpacing(9);

chart.colorScale(customColorScale);

chart.colorRange().enabled(true);

chart.colorRange().length("100%");

chart.container("viz");

chart.draw();

}

getTagCloud(15,"Test");

Final thoughts

I really like working with winkNLP, and its implementation of the BM25 algorithm definitely offers a strong statistical improvement over the classical TF-IDF approach. Another big plus I forgot to mention, is the speed at which winkNLP is able to process any chunk of unstructured data it is fed:

“The package can handle large amount of raw text at speeds over 525,000 tokens/second.”

I hoped you enjoyed reading this article, and see you soon for some new NLP adventures!